Default Service Agent Permissions Expose High-Risk Identity Paths

Security researchers have uncovered critical privilege escalation vulnerabilities in Google Cloud’s Vertex AI platform, allowing attackers with minimal permissions to gain control over high-privileged Service Agent accounts. These flaws stem from default configurations within Vertex AI Agent Engine and Ray on Vertex AI, exposing powerful managed identities at the project level.

As enterprises rapidly deploy Generative AI infrastructure, these overlooked identity risks represent a growing threat to cloud security. According to recent industry data, 98% of organizations are currently experimenting with or implementing Generative AI platforms, with Google Cloud Vertex AI being a popular choice.

Understanding the Risk: What Are Vertex AI Service Agents?

Service Agents are Google-managed service accounts used internally by cloud services to perform operations on behalf of users. Unlike user-created service accounts, these identities are automatically provisioned and often receive broad permissions by default, many of which span the entire project.

Because Service Agents operate silently in the background, they are frequently overlooked during security reviews, which makes them an attractive target for attackers seeking privilege escalation paths.

Identified Attack Vectors in Vertex AI

Researchers identified two distinct attack paths that turn these “invisible” managed identities into exploitable privilege escalation mechanisms.

1. Vertex AI Agent Engine Tool Injection Vulnerability

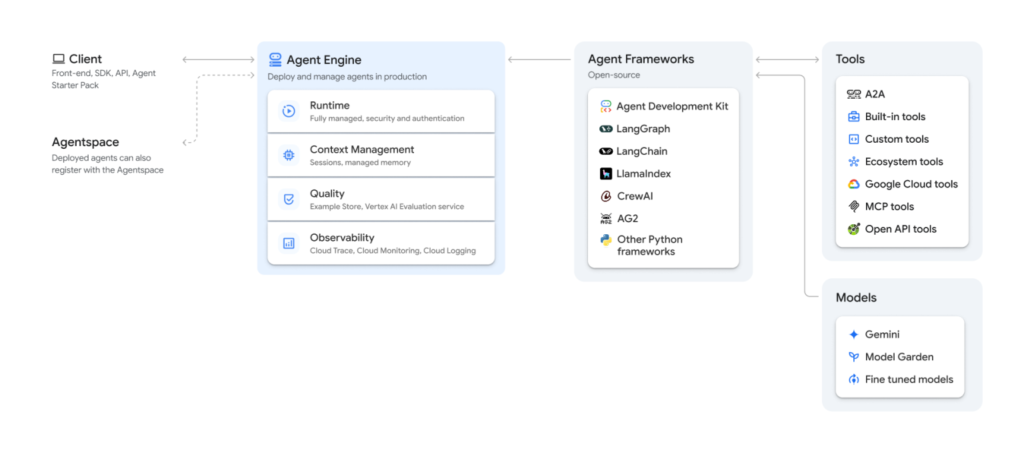

The first vulnerability targets Vertex AI Agent Engine, a service that enables developers to deploy AI agents using frameworks such as Google’s Agent Development Kit (ADK).

Attackers with the relatively low-privileged permission aiplatform.reasoningEngines.update can update an existing reasoning engine and inject malicious Python code into a tool definition.

Once triggered, the injected code executes on the reasoning engine’s compute instance. From there, attackers can access the instance metadata service to retrieve credentials for the Reasoning Engine Service Agent.
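To make the credential-retrieval step concrete, the sketch below shows what a metadata-server token request looks like from inside a Google Cloud workload. It uses the endpoint and header documented for Compute Engine; that the reasoning engine runtime exposes the same path is an assumption here, and the function names are illustrative only. Defenders can run something like this inside their own workloads to check which identity code there can obtain.

```python
import json
import urllib.request

# Metadata-server endpoint documented for Compute Engine workloads.
# Assumption: the managed runtime described above exposes the same path.
METADATA_TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def build_token_request(url: str = METADATA_TOKEN_URL) -> urllib.request.Request:
    """Build (but do not send) the request for the attached identity's token."""
    # The metadata server rejects requests that lack this header.
    return urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})


def fetch_token(timeout: float = 2.0) -> dict:
    """Send the request. This only succeeds from inside a Google Cloud workload."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        # The response is JSON with access_token, expires_in and token_type fields.
        return json.loads(resp.read().decode("utf-8"))
```

No additional authentication is involved in this request: any code that runs on the instance can make it, which is why code execution on the instance is enough to obtain the Service Agent's token.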

By default, this Service Agent has extensive permissions, including:

- Access to LLM memory and chat sessions

- Read and write access to Google Cloud Storage buckets

- Logging and monitoring capabilities

This allows attackers to read private conversations, extract sensitive data, and access stored AI artifacts, all while starting from minimal permissions.

2. Ray on Vertex AI: Viewer Access to Root Shell

The second vulnerability affects Ray on Vertex AI, where the Custom Code Service Agent is automatically attached to cluster head nodes.

Researchers found that users with permissions included in the standard Vertex AI Viewer role, such as aiplatform.persistentResources.list, can access the Head Node Interactive Shell directly through the Google Cloud Console.

Although the role is nominally read-only, this access gives attackers a root shell on the cluster head node. From there, they can query the metadata service and extract access tokens for the Custom Code Service Agent.

Although the token has limited IAM scope, it provides:

- Full read/write access to Cloud Storage

- Access to BigQuery datasets

- Pub/Sub interaction

- Read-only visibility across much of the cloud environment

This effectively turns a viewer role into a high-impact compromise path.
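Because the entry point here is an ordinary viewer grant, a useful first check is to see who holds one. The following is a minimal sketch that scans a project IAM policy, in the JSON shape returned by `gcloud projects get-iam-policy --format=json`, for principals bound to flagged roles. The default set contains only roles/aiplatform.viewer; which other predefined or custom roles include aiplatform.persistentResources.list is not covered by this article, so extending the set is left as an assumption for the reader to verify. The function and constant names are hypothetical.

```python
from typing import Dict, FrozenSet, List

# Assumption: roles to flag. roles/aiplatform.viewer is the Vertex AI Viewer role;
# add any other roles you have verified to include the relevant permissions.
DEFAULT_FLAGGED_ROLES: FrozenSet[str] = frozenset({"roles/aiplatform.viewer"})


def members_with_roles(
    policy: dict, flagged_roles: FrozenSet[str] = DEFAULT_FLAGGED_ROLES
) -> Dict[str, List[str]]:
    """Map each principal to the flagged roles it holds in the given IAM policy."""
    found: Dict[str, List[str]] = {}
    for binding in policy.get("bindings", []):
        role = binding.get("role")
        if role not in flagged_roles:
            continue
        for member in binding.get("members", []):
            found.setdefault(member, []).append(role)
    # Sort for stable, reviewable output.
    return {member: sorted(roles) for member, roles in sorted(found.items())}
```

The sketch only inspects project-level bindings in the policy it is given; roles inherited from folders or the organization would need their own policies passed through the same function.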

When these issues were disclosed, Google responded that the services were “working as intended.” As a result, the identified configurations remain enabled by default, placing responsibility on customers to secure their environments.

This response highlights a broader cloud security challenge: secure-by-default is not guaranteed, especially for complex AI and managed service platforms.

Security Recommendations for Vertex AI Users

Organizations using Google Cloud Vertex AI should take immediate action:

- Replace default Service Agent permissions with custom IAM roles following least-privilege principles

- Disable interactive head node shells where not strictly required

- Validate and restrict tool updates and agent code changes

- Monitor metadata service access using Security Command Center

- Regularly audit Service Agents as part of identity risk assessments
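The audit recommendation above can start from something as simple as listing which roles Service Agents hold in a project. The sketch below assumes the same IAM policy JSON shape as `gcloud projects get-iam-policy --format=json` and assumes that Service Agents follow the address pattern service-PROJECT_NUMBER@gcp-sa-SERVICE.iam.gserviceaccount.com; agents that use a different naming scheme would be missed. The names are illustrative, not part of any Google library.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Assumption: Google-managed Service Agents use addresses of the form
# service-<project-number>@gcp-sa-<service>.iam.gserviceaccount.com
SERVICE_AGENT_RE = re.compile(
    r"^serviceAccount:service-\d+@gcp-sa-[a-z0-9-]+\.iam\.gserviceaccount\.com$"
)


def service_agent_roles(policy: dict) -> Dict[str, List[str]]:
    """Return the roles bound to each Service Agent found in an IAM policy."""
    roles = defaultdict(set)
    for binding in policy.get("bindings", []):
        role = binding.get("role", "<unknown>")
        for member in binding.get("members", []):
            if SERVICE_AGENT_RE.match(member):
                roles[member].add(role)
    return {member: sorted(held) for member, held in sorted(roles.items())}
```

Reviewing this output alongside human and workload identities makes it easier to spot Service Agents whose project-wide roles exceed what the workload actually needs.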

Treat Service Agents as high-risk identities and apply the same scrutiny as human and workload identities.